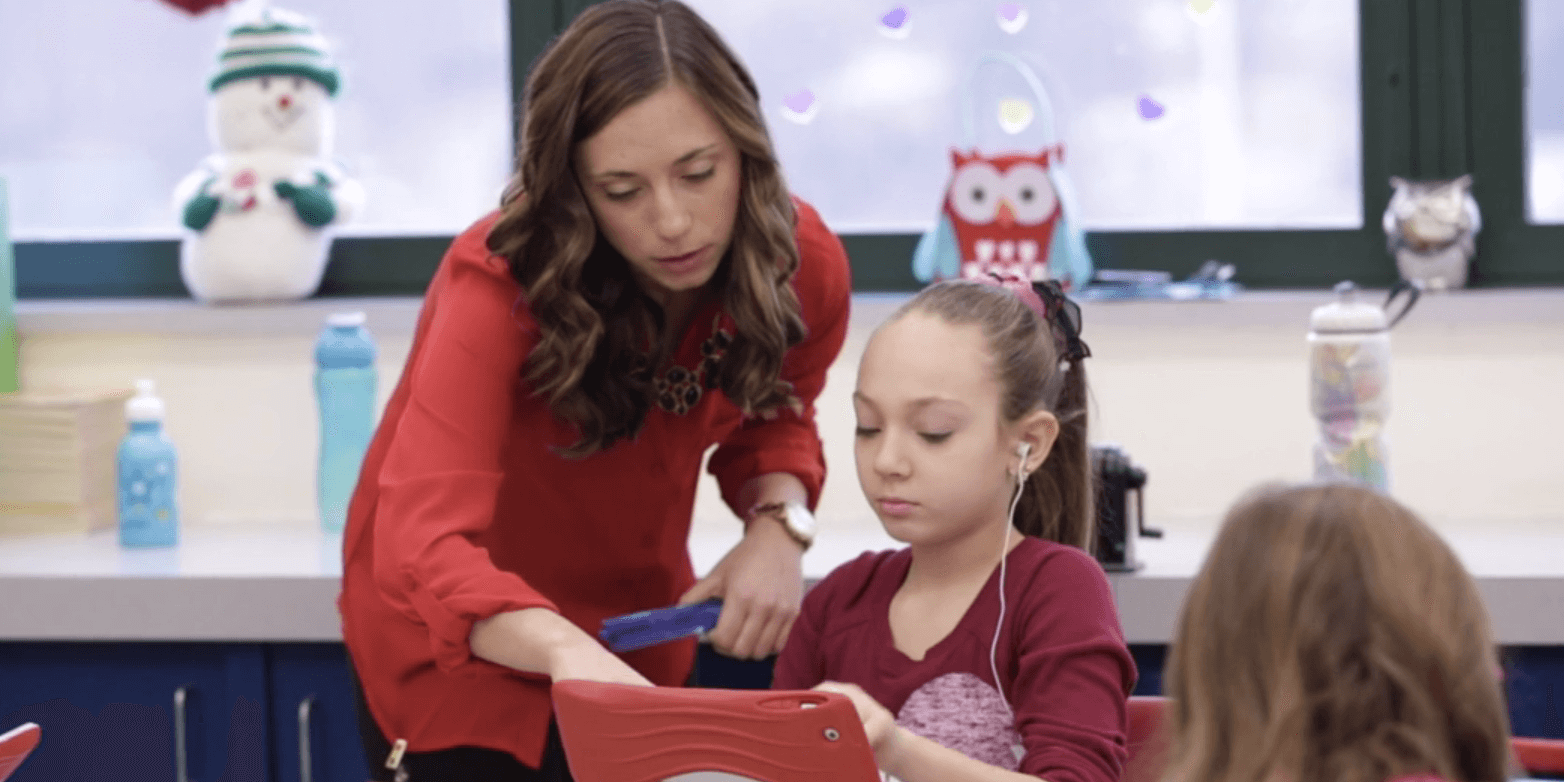

At eSpark, we believe that student agency is a powerful tool for learning. Our Choice Texts feature allows students to personalize their reading lessons by selecting characters, settings, and other story elements that reflect their interests and backgrounds. This customization fosters engagement and a deeper, more personal connection to reading. However, with this level of personalization comes the responsibility to ensure that all content remains appropriate for the classroom.

Our Approach to Content Moderation

Ensuring student safety is our highest priority. To that end, we have built a multi-layered content moderation system designed to proactively keep students from creating inappropriate or off-topic content as they personalize their reading passages.

Our context-aware approach to text moderation uses Hive’s advanced classification system. As students personalize their Choice Texts, our models screen their content for several categories of concern.

The categories that can cause a story to be flagged include:

- Sexual Content

- Hate Speech

- Violence and Threats

- Bullying and Harassment

- Child Safety and Exploitation

- Self-Harm and Suicide

- Drugs and Weapons

- Spam and Promotions

- Presence of Minors

- Gibberish and Redirection

Here’s how each layer of our moderation technology works:

- Prompt Filtering: As students enter their Choice Text inputs one by one, our moderation engine checks each submission in real time against a dynamic list of blocked words and phrases. If an input is deemed inappropriate, it is rejected immediately, before it can be used to generate a story. The list is continuously updated to reflect evolving language trends and emerging risks.

- Contextual Review: Individual words may be harmless on their own, but certain combinations can produce unintended meanings. Our moderation tools analyze students’ texts as a whole to reject problematic content that only becomes apparent once the full story is put together.

- AI-Powered Content Review: Our AI is designed not only to block inappropriate content but also to guide students toward safe, educational storylines. When a student attempts to create a story with an inappropriate prompt, the AI recognizes this and shifts the story’s focus, turning it into a more wholesome narrative while keeping the student engaged. This approach keeps student creativity positive and further reduces the likelihood of inappropriate material.

- Human Oversight: While AI plays a key role in proactive content moderation, our team of human reviewers regularly examines flagged stories and refines our filters based on what they learn.

Continuous Improvement in Moderation

We recognize that no moderation system is perfect, and we are always working to make ours stronger.

Recently, we saw an instance where a Choice Text story, generated from individually harmless prompts, took on an unintended meaning because of shifting cultural context. A year ago, the same story might have seemed merely odd but innocuous; current events, however, gave it a new and problematic connotation. Although this was an isolated case, it reinforced the need for ongoing vigilance and continued refinement of our moderation processes. As a result, we have taken the following actions:

- Expanded our filter list with additional words and phrases to capture emerging trends in language and culture.

- Reviewed and removed any potentially problematic Choice Text stories to ensure compliance with our standards.

- Enhanced AI pattern recognition to better detect issues with story content and refined our response strategies.

- Paused the Classroom Library feature to allow time for a full review of student-generated content and to put additional safeguards in place.

Our Commitment to Student Safety

At eSpark, we are committed to providing a learning environment that is safe, engaging, and empowering for all students. We will continue refining our moderation processes and implementing the latest advancements in AI and human oversight to ensure that Choice Texts remain a fun and appropriate way for students to engage with reading.

We appreciate the trust that educators and families place in us, and we are dedicated to continuous improvement. If you have any questions or concerns about how we handle content moderation, please don’t hesitate to reach out.