☝️ This seems like a simple question. Given how much airspace ChatGPT has taken up in K-12 conversations lately, one might even assume the answer is a given. Can students use ChatGPT? More like “can I stop them even if I wanted to,” amiright?

The answer is not as cut and dried as you might think. Here’s everything you need to know about the age requirements for the most popular large language models (LLMs), parental consent and content filtering considerations, and—crucially—the difference between using LLMs directly and using products that leverage their models for specific purposes.

Background

Remember the chaos when ChatGPT was released to the public and it felt like traditional writing assignments became obsolete overnight? The backlash was swift—schools and districts banned the technology outright while admitting that they would need to figure out how to coexist with this next technological revolution.

Predictably, other tech giants followed in the footsteps of Microsoft and OpenAI, each seeking to carve out an early slice of the exploding generative AI market. Students can now ostensibly choose between ChatGPT, Google Bard, Microsoft’s Bing (which uses GPT-4 to have conversations with its users), and countless apps that are little more than a minimal shell on top of the most popular LLMs. Apple is reportedly working on its own entry, referred to as Ajax GPT. Meta (Facebook’s parent company) has launched a “smaller, more performant” model known as LLaMA.

These things are everywhere at this point, and they’re getting better at an exponential rate, but there is still a lot of confusion around who can use them and when.

What Do the Terms of Use Say?

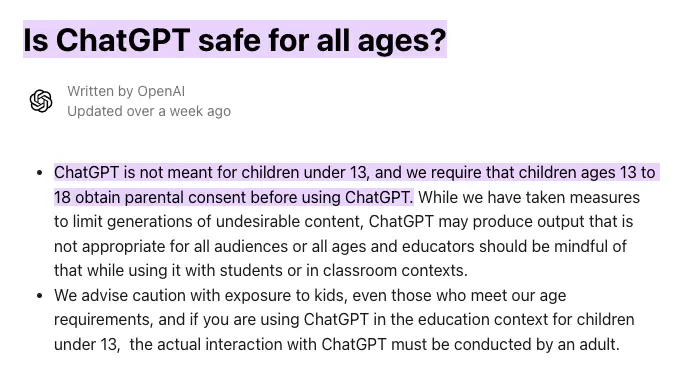

ChatGPT

Minimum 13 years old; parental consent required for ages 13-17

When ChatGPT was first introduced to the public, the Terms of Use required users to be at least 18 years old. In the spring of 2023, the minimum age dropped to 13, with an additional requirement for parental consent for anyone under 18. OpenAI also explicitly says they “advise caution with exposure to kids, even those who meet our age requirements…”

In a nod to potential applications for schools and teachers, OpenAI adds, “If you are using ChatGPT in the education context for children under 13, the actual interaction with ChatGPT must be conducted by an adult.”

Google Bard

Minimum 18 years old

Bard currently has the strictest terms of use regarding minors accessing the application. “To use Bard, you must be 18 or over. You also need a personal Google Account that you manage on your own, or a Google Workspace account for which your admin enabled access to Bard.” Bard is the least likely LLM to be used directly in the classroom, given these requirements and the prevalence of Google Workspace in US school districts.

Bing Chat

Minimum 13 years old

Bing is arguably the most accessible and least restrictive way for students to access the equivalent of an AI-powered chatbot. The lower age requirement has not gone unnoticed, either. Microsoft President Brad Smith was grilled in a September subcommittee hearing by Senator Josh Hawley, who pointed to examples of ways in which conversations could be harmful to minors. Smith pushed back, citing the educational benefits of the technology. We likely haven’t seen the last of that debate.

What Do K-12 Technology Leaders Say?

The situation on the ground is not as straightforward as merely adhering to the terms of use for these models. The issue is so complex that most district leaders still don’t have a consistent approach to age-related guidelines and recommendations.

One of the primary issues at play is the Children’s Internet Protection Act (CIPA). As school CIO Tony DePrato points out in his guest post for K12Digest, “Here in the USA, if a school receives E-rate funding from the government, they have a requirement to filter content.” That requirement comes from CIPA, which states that schools and libraries must certify that they have implemented policies to block or filter Internet access to “content harmful to minors.”

Even in schools that do not necessarily rely on E-rate funding, filtering and moderation of content is a high-priority moral, ethical, and, yes, legal concern. Unfettered access to large language models carries significant risk—not only that students may be exposed to misinformation and hallucinations, but also that they might find a way around the minimal filters that do exist.

To make matters even more challenging, those tasked with creating policies often don’t have much visibility into everything their students are interacting with in the classroom. As Pete Just, a longtime school district CTO and current Executive Director of the Indiana CTO Council, said in a recent webinar, district technology leaders often have “no idea what web apps our teachers are using.”

No matter how much effort district technology departments put into vetting, monitoring, and centralizing resources, much of the work lies in training teachers to be aware of and comply with FERPA, COPPA, and other laws governing student data privacy, security, and parental consent.

The Difference Between Direct and Indirect Access

If OpenAI says kids under 13 should not use its product, and kids under 18 need explicit parental consent, and we don’t have any way to guarantee that students won’t be exposed to harmful content, what are we even doing here? Did New York City and the many other districts that banned ChatGPT last year have the right idea all along?

This is where it’s very important to distinguish between allowing students to access large language models directly versus using products that leverage those models to build “AI-powered/enabled/supported” tools. “Can my students use ChatGPT?” is not necessarily the same question as “Can my students use any program that uses ChatGPT?”

For the non-technical reader, the way most generative AI functionality is built into other platforms can best be described as adding a customized outer layer of code and user experience designed to get ChatGPT or another LLM to fulfill a specific kind of request in a specific kind of way. Platforms use what is known as an Application Programming Interface (API) to send prompts to LLMs and take in the responses to those prompts.
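
To make the “outer layer” idea concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `build_messages` and `ask_reading_helper` names, the prompt wording, and the `fake_llm` stand-in are illustrative assumptions, not any vendor’s actual code. A real product would replace `fake_llm` with a call to its LLM provider’s API.

```python
from typing import Callable, Dict, List

# A "message" is a role plus text. This mirrors the chat format commonly
# used by LLM APIs, but the exact shape here is an assumption.
Message = Dict[str, str]


def build_messages(grade_level: int, student_question: str) -> List[Message]:
    """Wrap the student's raw question in vendor-written instructions."""
    system_prompt = (
        f"You are a reading helper for grade {grade_level} students. "
        "Use age-appropriate language and stay on the topic of the lesson."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": student_question.strip()},
    ]


def ask_reading_helper(
    llm: Callable[[List[Message]], str],
    grade_level: int,
    student_question: str,
) -> str:
    """Send the wrapped prompt to the model and return its reply."""
    return llm(build_messages(grade_level, student_question))


def fake_llm(messages: List[Message]) -> str:
    """Hypothetical stand-in for a real API call so the sketch runs offline."""
    return f"(model reply to {len(messages)} messages)"


if __name__ == "__main__":
    print(ask_reading_helper(fake_llm, 4, "What is a metaphor?"))
```

The student only ever types the question. The vendor controls the instructions wrapped around it, which is where much of the product’s behavior is decided.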

The API alleviates some of the privacy and security concerns that come with direct access to ChatGPT, chiefly because, under OpenAI’s stated data usage policy, data sent through the API is not shared and does not become part of the model’s training set. Practically speaking, this means you have far less reason to worry about your students sharing sensitive data that can never be retrieved once it’s out in the ether.

The other half of this discussion, content moderation and age-appropriateness, is going to vary widely from one vendor to another. This is entirely dependent on the quality of the prompts the vendor is sending to the LLMs, the automated moderation tools those vendors have in place to catch inappropriate content before it goes in and after it comes out, and the human feedback loops they have in place to catch and correct anything the robots missed.
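
The layers described above (a check before the prompt goes in, a check after the response comes out, and a queue for human review) can be sketched roughly as follows. This is a toy illustration under loud assumptions: the keyword blocklist, the class and function names, and the `fake_llm` stand-in are all hypothetical, and real vendors typically rely on far more capable moderation tools than keyword matching.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Toy blocklist; an assumption for illustration only.
BLOCKED_TERMS = {"violence", "gambling"}

SAFE_REPLY = "Sorry, I can't help with that. Please ask your teacher."


def is_flagged(text: str) -> bool:
    """Return True if any blocked term appears as a word in the text."""
    words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
    return not BLOCKED_TERMS.isdisjoint(words)


@dataclass
class ModeratedChat:
    """Wraps a model call with input and output checks."""

    llm: Callable[[str], str]
    # Items a human reviewer should look at later.
    review_queue: List[str] = field(default_factory=list)

    def ask(self, prompt: str) -> str:
        # Check before the prompt goes in.
        if is_flagged(prompt):
            self.review_queue.append(f"input: {prompt}")
            return SAFE_REPLY
        reply = self.llm(prompt)
        # Check after the response comes out.
        if is_flagged(reply):
            self.review_queue.append(f"output: {reply}")
            return SAFE_REPLY
        return reply


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"Here is a short answer about: {prompt}"


if __name__ == "__main__":
    chat = ModeratedChat(fake_llm)
    print(chat.ask("photosynthesis"))
    print(chat.ask("Tell me about gambling!"))
    print(chat.review_queue)
```

When evaluating a vendor, it is worth asking which of these layers they actually have in place and who reviews what ends up in the queue.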

So…Can Students Use ChatGPT or Not?

Unless you’re teaching older students (13+), and have documented parental consent, and have informed parents of your inability to filter content, and are OK with the potential for sensitive information to be shared, and have otherwise checked all the boxes of your internal use policies, the answer is no: your students should not be using ChatGPT directly.

That said, you 100% should be exploring ways to leverage the power of generative AI for good in your classroom with apps or programs that are made specifically for kids and address all of the reasons why ChatGPT by itself is not a good solution. The underlying technology opens up a whole new world of opportunities for differentiated learning, personalized content, and personalized remediation that just weren’t possible before.

As always, keep your tech department in the loop—they can’t give you guidance if they don’t know what you’re using. Any AI-powered tools should also meet the same standards that you would hold any learning program to for accessibility, privacy, security, age-appropriateness, and instructional value.

Educators who have been around for a while will all tell you the same thing: generative AI feels very new and unique right now, but we’ve been through this cycle before. As long as we are intentional, smart, and transparent about how it fits into the learning environment, everything will be OK.

Want to stay in the loop on the topic of AI in schools? Subscribe to EdTech Evolved today for monthly newsletter updates and breaking news.